I woke just as soldiers entered the cabin and shot us where we lay. They left, and as the life seeped out of me the warship rammed us, the bow cleaving my boat with a resounding crunch that threw us across the cabin, water rushing in.

–x–

I woke up and got out of bed, quietly, so as not to wake her. I watched her. She had her back to me, as I put on my overalls. She was so beautiful and I could not get enough of looking at her curves, her shoulder, her hair.

I rushed on deck with two large explosives and threw them overboard. The dolphins would know what to do. I saw them snatch the explosives and disappear underwater. It was only then, too late, that I saw a dinghy approaching. Shots were fired and two rounds hit me, burning through my arm and shoulder. I could do nothing as the soldiers came aboard and split up. One looked at me as I heard the shots ring out below. Then, he finished me off with a shot to the head.

–x–

I woke up and got out of bed, quietly, so as not to wake her. I watched her. She had her back to me, as I put on my overalls. She was so beautiful and I could not get enough of looking at her curves, her shoulder, her hair.

I scrambled on deck and saw the warship and dinghy approaching. I quickly hid. Then I saw me run on deck and throw the explosives overboard. The dolphins would know what to do. I watched me watch them snatch the explosives and disappear underwater. I then saw me look at the approaching dinghy. Shots were fired and I saw two rounds hit me, burning through my arm and shoulder.

Then, I jumped up when the soldiers boarded. I knocked a few off the deck with a wrench. But one managed to stab me in the gut and I fell. I could do nothing as the remaining soldiers came aboard and split up. One looked at me as I heard the shots ring out below and on deck. Then, he finished me off with a shot to the head.

–x–

I woke up and got out of bed, quietly, so as not to wake her. I watched her. She had her back to me, as I put on my overalls. She was so beautiful and I could not get enough of looking at her curves, her shoulder, her hair.

I watched myself wake up and quietly get out of bed, so as not to wake her. I watched as I watched her. She had her back to us, as I put on my overalls. She was so beautiful and we could not get enough of looking at her curves, her shoulder, her hair.

I and I scrambled on deck and saw the warship and dinghy approaching. I and I quickly hid. Then I watched me see me run on deck and throw the explosives overboard. The dolphins would know what to do. I watched me watch me watch them snatch the explosives and disappear underwater. I then saw me look at the approaching dinghy. Shots were fired and I saw two rounds hit me, burning through my arm and shoulder.

Then, I and I jumped up when the soldiers boarded. We knocked most of them off the deck with wrenches. But two managed to stab us in the gut and we fell. We could do nothing as the remaining soldiers came aboard and split up. One looked at me as I heard the shots ring out below and on deck. Then he finished me off with a shot to the head and then he finished me off with a shot to the head.

–x–

I woke up and got out of bed, quietly, so as not to wake her. I watched her. She had her back to me, as I put on my overalls. She was so beautiful and I could not get enough of looking at her curves, her shoulder, her hair.

I scrambled on deck and saw the warship and dinghy approaching. I saw that two of me were already hidden, waiting for the soldiers. I started hauling on the main sail line. Then I watched me see me run on deck and throw the explosives overboard. The dolphins would know what to do. I watched me watch me watch them snatch the explosives and disappear underwater. I then saw me look at the approaching dinghy. Shots were fired. A round hit me in the chest, and I also saw two rounds hit me, burning through my arm and shoulder. I slowly crumpled to the deck.

Then, I watched me and me jump up when the soldiers boarded. I saw us knock most of them off the deck with wrenches. But two managed to stab us in the gut and we fell. We could do nothing as the remaining soldiers came aboard and split up. I watched as one looked at me as I heard the shots ring out below and on deck. Then I watched him finish me off with a shot to the head and then finish me off with a shot to the head and then walk over to finish me off with a shot to the head.

–x–

I woke up and got out of bed, quietly, so as not to wake her. I watched her. She had her back to me, as I put on my overalls. She was so beautiful and I could not get enough of looking at her curves, her shoulder, her hair.

I watched myself wake up and quietly get out of bed, so as not to wake her. I watched me watch me watch her. She had her back to us, as we put on my overalls. She was so beautiful and we could not get enough of looking at her curves, her shoulder, her hair.

I and I scrambled on deck and saw the warship and dinghy approaching. I saw myself see that two of me were already hidden, waiting for the soldiers. I saw myself start to haul on the main sail line, and then I started hauling as well. Then I watched me see me run on deck and throw the explosives overboard. The dolphins would know what to do. I watched me watch me watch me watch them snatch the explosives and disappear underwater. I then watched me see me look at the approaching dinghy. Shots were fired. I saw a round hit me in the chest, and I also saw two rounds hit me, burning through my arm and shoulder. I saw me slowly crumple to the deck, as I ran to grab the helm to catch some wind.

Then, I watched me and me jump up when the soldiers boarded. I saw us knock most of them off the deck with wrenches. But two managed to stab us in the gut and we fell. We could do nothing as the remaining soldiers came aboard and split up. I hollered at them, startling them for a moment, then swung the boat so that the boom would hit them.

Two ducked, but three were knocked over, though not hard enough, because the boom was not loose enough. The two who ducked stood up and shot me down.

As best I could, I watched as one looked at me as I heard the shots ring out below and on deck. Then I watched him finish me off with a shot to the head and then finish me off with a shot to the head and then walk over to finish me off with a shot to the head and then walk over and finish me off with a shot to the head.

–x–

I woke up and got out of bed, quietly, so as not to wake her. I watched her. She had her back to me, as I put on my overalls. She was so beautiful and I could not get enough of looking at her curves, her shoulder, her hair.

I scrambled on deck and saw the warship and dinghy approaching. Shots were fired. I saw a round hit me in the chest, and I also saw two rounds hit me, burning through my arm and shoulder. I saw me slowly crumple to the deck, and saw me run to grab the helm to catch some wind.

Then, I watched me and me jump up when the soldiers boarded. I quickly grabbed the line that held the boom as I saw us knock most of the soldiers off the deck with wrenches. But two managed to stab us in the gut and we fell. We could do nothing as the remaining soldiers came aboard and split up. I then heard me holler at them, startling them for a moment, then I let go of the boom line as I swung the boat so that the boom would hit them.

Two ducked, but, with a horrible crack, the other three were knocked down. I watched as the two who ducked stood up and shot me down, then turned on me and shot me.

As best I could, I watched as one looked at me as I heard the shots ring out below and on deck. Then he finished me off with a shot to the head.

–x–

I woke up and got out of bed, quietly, so as not to wake her. I watched her. She had her back to me, as I put on my overalls. She was so beautiful and I could not get enough of looking at her curves, her shoulder, her hair.

I watched myself wake up and quietly get out of bed, so as not to wake her. I watched me watch me watch her. She had her back to us, as we put on my overalls. She was so beautiful and we could not get enough of looking at her curves, her shoulder, her hair.

I and I scrambled on deck and saw the warship and dinghy approaching. Shots were fired. I saw a round hit me in the chest, and I also saw two rounds hit me, burning through my arm and shoulder. I saw me slowly crumple to the deck, and saw me run to grab the helm to catch some wind.

Then, I watched me and me jump up when the soldiers boarded. I knew what I had to do and didn’t wait to watch me quickly grab the line that held the boom and watch us knock most of the soldiers off the deck with wrenches.

I scrambled up on top of the main cabin.

I knew that two managed to stab us in the gut and we fell. We were actually getting somewhere with the remaining soldiers who came aboard and split up. I held on as the boat pitched one way then the other. I then heard me holler at them, startling them for a moment, then let go of the boom line as I swung the boat so that the boom would hit them.

Two ducked, but, with a horrible crack, the other three were knocked down. I grabbed my rifle and watched as the two who ducked stood up and shot me down, then turned on me and shot me at the boom line.

As best I could, with two quick cracks of my rifle, I finished off one then the other with a shot to the head.

I looked up in time to see the warship explode and rapidly sink, the wake of its momentum and the explosion pushing my boat out of the way.

Exhausted, I went back below. She was so beautiful and I could not get enough of looking at her curves, her shoulder, her hair. I watched her. She had her back to me, as I removed my overalls. I got in bed, quietly, so as not to wake her, and fell asleep.

Written in 2012 as part of a NaNoWriMo novel.

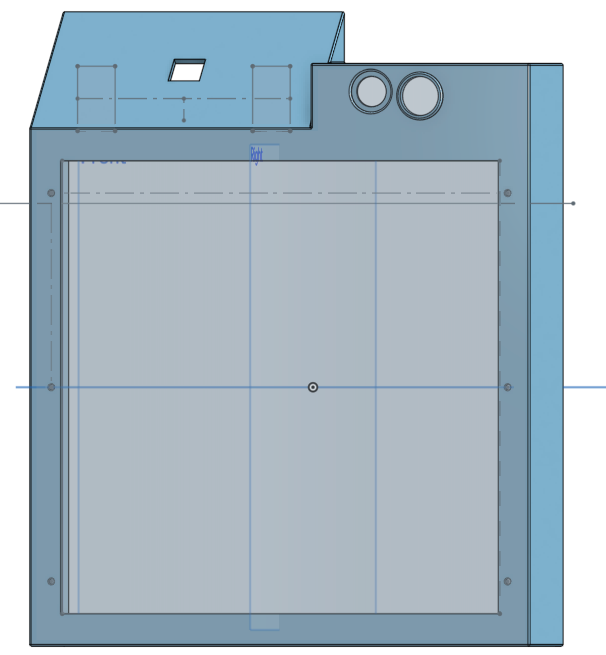

image: courtesy DALL-E