Back in 2008, I was the Editor-in-Chief for Nokia Conversations, the main Nokia blog. Like a good tech blog back then, in addition to industry news, product reviews, and event reporting, we also came up with different mobile-related challenges.

Relevant to this post, we were looking at the next year bringing in a billion new mobile users, mostly in emerging markets in Africa, South Asia, and South East Asia. Nokia had released the 1100-series of entry-level text-voice-only phones, such as the Nokia 1209. They were about €50 or less, built robustly, and meant to sell in the 100Ms, as indeed some in the series did.

Dumbphones rule?

I got my first Nokia smartphone at the end of 2001. As I was on the Series 60 (the OS in the smartphones) marketing team, I used a long stream of the latest smartphones, even thru the next two roles I had at Nokia.

But the whole thing with emerging markets always got me excited. We’d speak about SMS services for Kerala fishermen to sell their fish before hitting the shore, of Kenyan services to find drug counterfeits, and of emerging mobile payment systems bringing micro-loans and remittances to millions for the first time.

Club 1100, anyone?

Therefore, I wondered what it was to live in that text-voice-only world. After years with a smartphone, I’d forgotten what it was like.

As a sort of challenge to myself, I decided to see if I could go 30 days using one of these entry-level phones. I picked up a 1209, put my smartphone away, hooked into some SMS services, and had an interesting time going smartphoneless.

Same but different?

Unlike in 2008, now that we are in a pervasive smartphone world, the urge to ditch is driven not by nostalgia or empathy, but by a desire to take more control over the lean-forward, two-handed, two-eyes, full-attention devices that smartphones have become.

As I say in a post from 2007:

…there is a distinction between Mobile Computing vs a Mobile Lifestyle.

Mobile Computing is two-hands, two-eyes, lean forward, flat surface, stationary, broad-band, big screen, big keyboard, mouse, multi-window, multi-button.

The Mobile Lifestyle is one-hand, interruptive, back-pocket, walking, in and out of attention, focused (not necessarily simple).

Allison Johnson, from The Verge, is the latest in the spirit of the Club 1100. She tried to use just her Apple Watch.

I ditched my smartphone for a cellular smart watch — here’s how it went | The Verge

As I discovered with the Club 1100 Challenge, even back then there were expectations of fuller connectivity and applications. Fast forward to now, and our world is built even more around the expectation that everyone has a smartphone.

Also, so much of our info is digital – maps, contacts, messages. When I went Club 1100, I printed out maps and contacts (my phone wasn’t connected to migrate contacts). And I had some folks get upset when I wasn’t able to engage, as I used to, with more advanced messaging and such. [Tho I am sure the ‘basic’ phones of today have the key apps needed, such as Google Maps and WhatsApp]

Almost, but no cigar

I realize that Allison was just exploring the idea. She by no means went cold turkey as I did. She did carry around connected devices and had a smartphone turned off in her bag.

But she was able to learn quite a bit of what being without a smartphone entails. So, kudos there.

Interestingly, mining my old posts, I was reminded that, having used smartwatches back in the day, I had explored them as new and potentially innovative surfaces (2013). I was reminded, as well, that I had also posited smartwatches as a way to liberate us from our smartphones (2017), at least once haha.

But as I always say, the current smartwatches are not designed to be independent, but to be a side-screen, at best. This is partly due to a desire to use the watch as a hook to keep folks on their main device, the smartphone; but I also feel this is partly due to some myopia of smartphone-centric designers not thinking outside the box phone.

How now, you?

Allison only tried 7 days. I can see that being the equivalent of me trying 30 days back in 2008, considering how essential smartphones have become. Like her, I also realized how much planning it takes to go back to a smartphoneless existence. And, like her, I became much more aware of how much, even back then, smartphones had insinuated themselves into our day-to-day (hm, maybe we need to insert ‘smartphones’ into our Maslow hierarchy? where would it fit, tho, haha?).

There are folks making basic phones, locking apps, and the like. But I agree with Allison that we need to make these changes positive, not punishing.

And when I hear of these gimmicks to get folks off smartphones, I ask myself if they are trying to remove something, or seeking to teach folks a positive new (or old) way of navigating the world.

What do you think? Do we need to amputate, or redirect our behavior? Are smartphones the problem or are we?

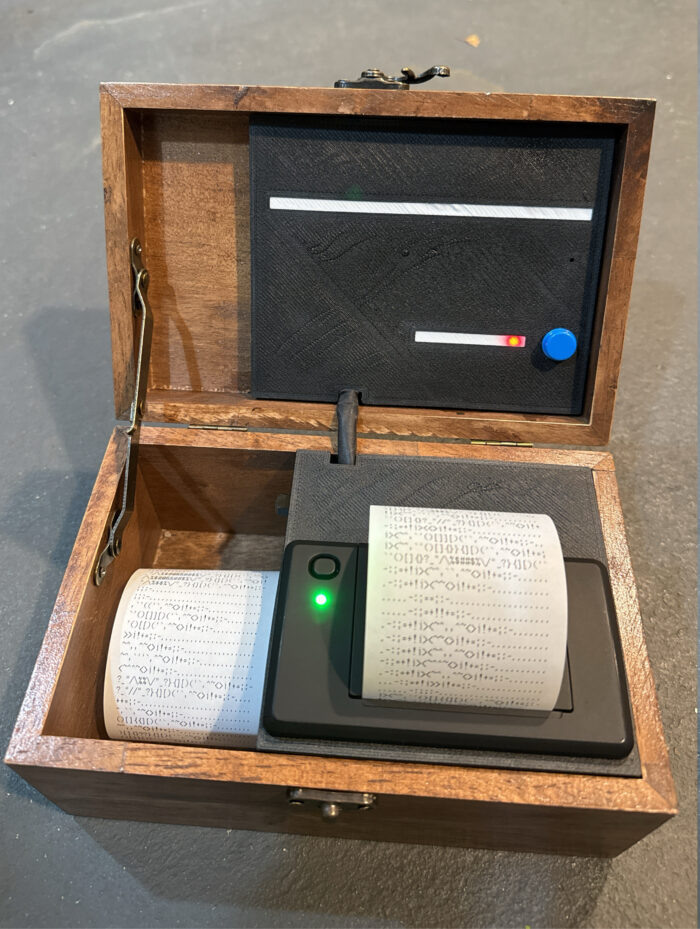

Image from Allison’s article. Go read it.