In a recent video, Seon talked about his struggle with open sourcing his products.

He’s in favor of open sourcing, obviously, and makes most of his software and hardware designs available as open source. From what I can tell from the licenses he uses, the code and designs are free to be used, modified, and sold, though with attribution and share alike.

But he’s concerned about the impact that open sourcing his work has had on him.

For starters, he keeps finding folks who don’t read or understand or respect his license. I’ve seen him remind folks on Discord to respect the license when they use his work. I can’t imagine what happens when folks don’t tell him they’ve used his design and he stumbles upon them, perhaps even on AliExpress.

He also points out the work involved in creating documentation while being time starved. And I get it, part of the reason I don’t have my code and design out there is just that added effort in documentation and clean up for reusability (not that anyone is interested in what I make anyway, and my code is quite ugly and useless, haha).

Mittelmachers

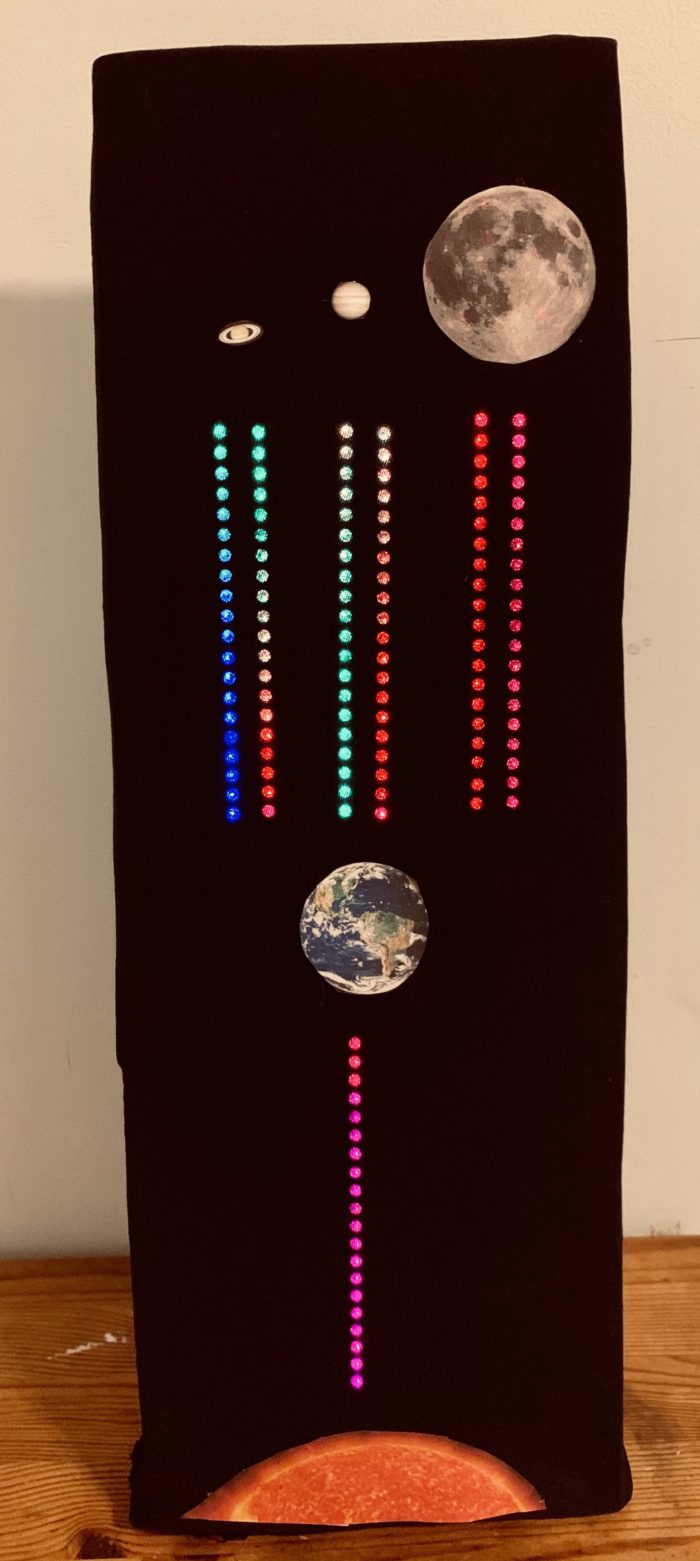

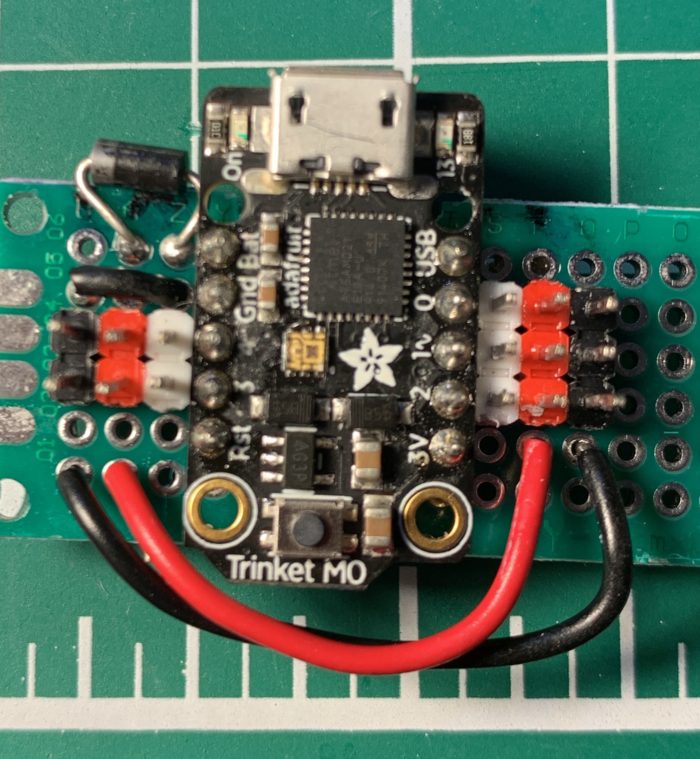

Interestingly, in his discussion, he does make a distinction between tinkerers and big folks with a thriving ecosystem, such as Adafruit. Tinkerers are sharing and not looking to earn from that work, so it’s ok to share and share alike. And folks with a large ecosystem and brand can afford to give their designs away freely, as they have a steady business of also selling things to their audience.

Seon sees himself in the middle somewhere. He talks about making open sourcing decisions based on how they affect his business. And the value of open sourcing his creations is sometimes unclear to him.

Dropping the BOM

This discussion came up when he was explaining why there were a few of his products he would not open source. A reason might be the effort to make something open source, as I said above, especially for something he’s not necessarily intending to sell. But, also, he brought up his concern around giving away what he is working hard to make money and survive on. He wants to give back to the community, but doesn’t want to make it easy for unscrupulous freeloaders to undercut him competitively in sales.

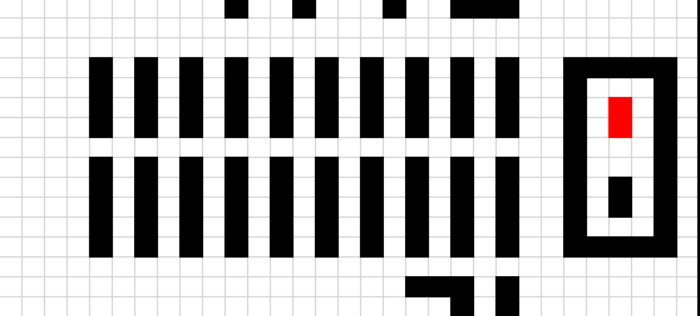

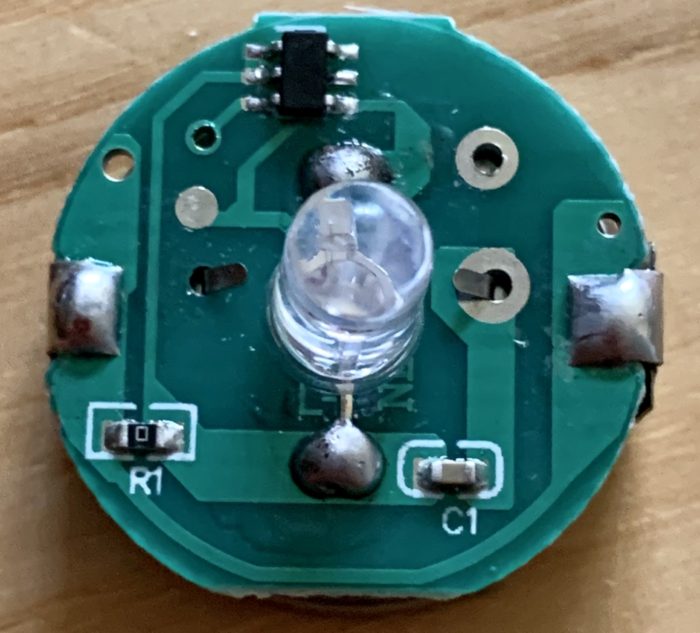

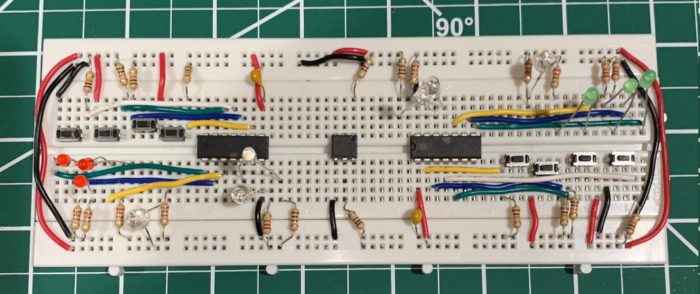

As an example, he talked about why he doesn’t release his BOM or component library. He mentioned how his Gerbers, schematics, and board files are fine to share, but his BOM is too much for him to give away. Yes, he knows that a lot of that info is in the files he shares. But the BOM is basically a shopping list of his components and suppliers, which he feels is a competitive advantage he’s built over years, and giving it away makes it too easy for folks to undercut him.

The business of making

One challenge of going from a tinkerer to one who builds a business on open source hardware is that transition from building a sales stream to being big enough, both in sales and brand, that knockoffs have minimal impact.*

The challenge of balancing open sourcing one’s designs with building a book of business has been around for a long time.

And that balance teeters wildly for those who are trying to meaningfully give back to the community while also trying to protect the business they depend on.

Not an easy balance.

What do you think? How does open sourcing help or hold back early stage hardware makers? How do you balance protecting your business and growing your brand and community?

*Of course, this presumes copycats have negligible impact on sales at Adafruit or SparkFun or any of the other big maker brands. And I wonder if their path to growth has helped them balance between open and closed, and build resilience against those who might undercut them.

Update, 10 hours later….

Wow, I seem to have hit a nerve. A very interesting discussion ensued after Seon picked up on my tweet. I think these kinds of discussions are helpful for us to understand the business of making, and the challenges middle manufacturers have.

Here is the tweet if you want to see the thread that ensued.

Image by Nick Fewings